What Is Video Moderation? Definition and How It Works

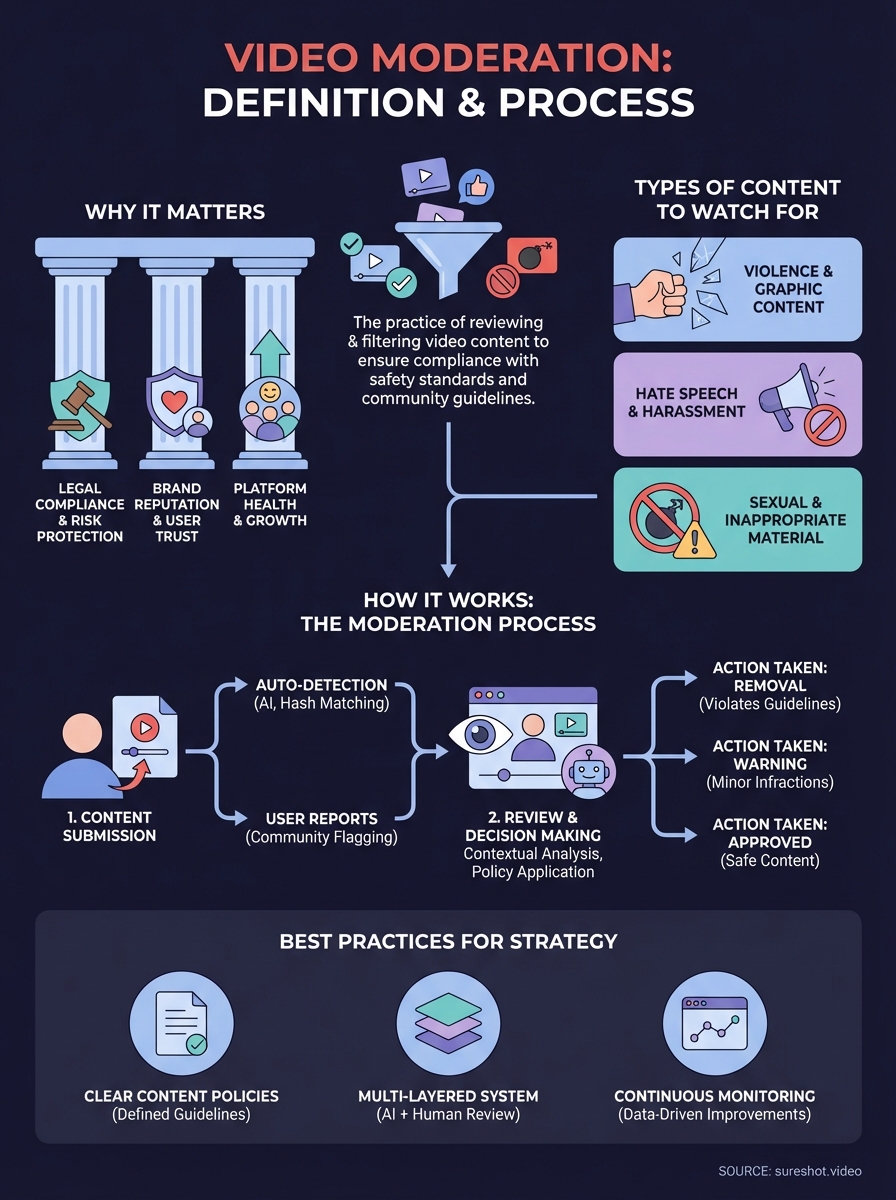

Video moderation is the process of reviewing and filtering video content to ensure it meets safety standards and community guidelines. This practice protects viewers from harmful material like violence, hate speech, explicit content, or misinformation. Whether you run a social media platform, host events with user submissions, or manage any site with video uploads, moderation helps you maintain brand reputation, comply with laws, and create a safe space for your audience.

This guide walks you through everything you need to know about video moderation. You'll learn why it matters for your platform, how the review process actually works, and the differences between manual and automated approaches. We'll cover the specific types of problematic content you should watch for and share practical strategies to build an effective moderation system. By the end, you'll understand how to protect your users while keeping your video content trustworthy and compliant.

Why you need video moderation

Your platform faces serious risks when you allow unfiltered video uploads. Harmful content spreads quickly across digital channels, exposing you to legal liability, brand damage, and user safety concerns. Understanding what video moderation is and implementing it properly protects your organization from these threats while building trust with your audience. You can't rely on users to self-regulate or assume problematic content won't appear on your platform.

Legal compliance and risk protection

In many jurisdictions, you can be held legally responsible for content hosted on your platform, especially once you know it is there. Regulatory frameworks like the European Union's Digital Services Act require platforms to act quickly on illegal content once they are notified of it, or face substantial fines. Failure to moderate can expose your organization to lawsuits related to defamation, copyright infringement, or distribution of illegal material. Your moderation system serves as your first line of defense against these legal consequences.

Beyond avoiding penalties, active moderation demonstrates to regulators that you take platform safety seriously. Courts increasingly hold platforms accountable when they ignore obvious risks. Your documentation of moderation efforts can protect you in legal proceedings by showing you acted responsibly.

Brand reputation and user trust

A single viral video containing offensive content can undo years of brand building in hours. Users expect safe spaces when they interact with your platform, and one bad experience can drive them to competitors for good. Your brand becomes associated with whatever content appears under your name, whether you created it or not. Proactive moderation signals to users that you respect their experience and value their safety.

Platforms with strong moderation policies tend to see higher user retention and engagement because people feel comfortable participating.

Parents won't let children use platforms known for inappropriate content. Advertisers pull funding from sites associated with extremism or violence. Your moderation standards directly impact your revenue streams and growth potential.

Platform health and growth

Unmoderated platforms quickly become unusable as bad actors flood them with spam, scams, and disturbing material. Quality users abandon communities that feel unsafe or chaotic, leaving you with an audience that damages your platform's value. You need moderation to maintain the environment that attracted users initially. Your platform's long-term viability depends on creating spaces where legitimate users want to spend time and contribute positively.

How the moderation process works

Understanding video moderation requires looking at the workflow stages that protect your platform. The process follows a systematic path from the moment a user uploads content until it either goes live or gets removed. Your moderation system combines detection methods, review protocols, and enforcement actions to filter out problematic material, ideally before it reaches your audience.

Content submission and flagging

Videos enter your moderation queue through two primary channels. Automatic detection systems scan every upload immediately for known violations using hash matching, keyword recognition, and visual pattern analysis. These systems compare new content against databases of banned material and trigger alerts when they find matches. User reports form the second channel, where community members flag content they believe violates your guidelines. Your platform should make reporting simple and accessible on every video page.
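The two intake channels can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: `ModerationQueue`, `scan_upload`, and `report` are hypothetical names, and the exact SHA-256 match used here only catches byte-identical re-uploads. Real hash-matching systems typically rely on perceptual hashes designed to survive re-encoding and minor edits.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ModerationQueue:
    """Hypothetical queue fed by both intake channels."""
    banned_hashes: set[str]  # digests of content already confirmed as violating
    items: list[tuple[str, str]] = field(default_factory=list)  # (video_id, reason)

    def scan_upload(self, video_id: str, data: bytes) -> bool:
        """Channel 1: check every upload against the banned-content database.

        Exact SHA-256 matching is an assumption for simplicity; it misses
        any copy whose bytes differ (re-encoded, trimmed, watermarked).
        """
        digest = hashlib.sha256(data).hexdigest()
        if digest in self.banned_hashes:
            self.items.append((video_id, "hash_match"))
            return True
        return False

    def report(self, video_id: str, reason: str) -> None:
        """Channel 2: a community member flags a video from its page."""
        self.items.append((video_id, f"user_report:{reason}"))


known_bad = hashlib.sha256(b"bytes of a previously removed video").hexdigest()
intake = ModerationQueue(banned_hashes={known_bad})
intake.scan_upload("vid-1", b"bytes of a previously removed video")  # match: queued
intake.scan_upload("vid-2", b"bytes of a new video")  # no match: not queued
intake.report("vid-2", "harassment")  # but a user can still flag it
print(intake.items)  # [('vid-1', 'hash_match'), ('vid-2', 'user_report:harassment')]
```

Note that `vid-2` passes the automatic check and only reaches the queue because a user reported it, which is why the two channels complement each other.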

Review and decision making

Your moderation team examines flagged content against your community standards to determine appropriate actions. Reviewers watch the full video, check context, and apply your written policies consistently. They categorize violations by severity, from minor infractions that warrant warnings to serious breaches requiring immediate removal and account suspension. Each decision generates a record that helps train future automated systems and provides evidence if users appeal.

Your moderation workflow should balance speed with accuracy, since delays can allow harmful content to spread while rushed decisions risk removing legitimate videos.

Clear escalation paths ensure complex cases reach experienced moderators who can handle nuanced situations appropriately.
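One way to keep review decisions consistent and auditable is to encode the severity ladder and write every call to a record. The sketch below assumes a hypothetical four-level `Severity` scale, action mapping, and `decide` helper; your own written policy defines the real tiers and actions. Cases a reviewer marks as needing more context are escalated instead of enforced.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class Severity(Enum):
    NONE = 0
    MINOR = 1     # minor infraction: warn the uploader
    MODERATE = 2  # clear breach: remove the video
    SEVERE = 3    # serious breach: remove and suspend the account


# Hypothetical enforcement ladder; the exact mapping is a policy decision.
ACTIONS = {
    Severity.NONE: "approve",
    Severity.MINOR: "warn",
    Severity.MODERATE: "remove",
    Severity.SEVERE: "remove_and_suspend",
}


@dataclass(frozen=True)
class Decision:
    """Immutable record kept for appeals and for training automated filters."""
    video_id: str
    reviewer: str
    policy_section: str
    severity: Severity
    action: str
    escalated: bool
    decided_at: datetime


def decide(video_id: str, reviewer: str, policy_section: str,
           severity: Severity, needs_context: bool) -> Decision:
    """Record a reviewer's call; unclear cases go to a senior queue instead."""
    action = "escalate_to_senior" if needs_context else ACTIONS[severity]
    return Decision(video_id, reviewer, policy_section, severity, action,
                    needs_context, datetime.now(timezone.utc))


review_a = decide("vid-7", "reviewer-a", "violence/graphic", Severity.SEVERE, needs_context=False)
review_b = decide("vid-8", "reviewer-a", "violence/news", Severity.MODERATE, needs_context=True)
print(review_a.action)  # remove_and_suspend
print(review_b.action)  # escalate_to_senior
```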

Manual versus automated moderation

Your choice between manual and automated moderation directly affects your platform's safety standards and operational costs. Each approach has distinct strengths and weaknesses that shape what video moderation means in practice. Most successful platforms combine both methods, using automation for initial screening and human reviewers for complex decisions. Your specific needs depend on your content volume, budget, and the types of videos your users upload.

Manual moderation advantages and limits

Human moderators excel at understanding context and nuance that automated systems miss. They recognize sarcasm, cultural references, and situations where technically rule-breaking content serves educational or newsworthy purposes. Your manual team can spot new types of violations that haven't been programmed into algorithms yet, adapting to emerging threats quickly. However, this approach scales poorly and exposes reviewers to traumatic content that causes psychological harm. Manual review costs significantly more per video and creates bottlenecks during high upload periods.

Automated systems and AI capabilities

Artificial intelligence can process thousands of videos per minute, catching obvious violations before they go live on your platform. These systems identify known terrorist content, copyrighted material, and explicit imagery with high accuracy using hash matching, image recognition, and audio analysis. Your automated tools work continuously without fatigue, maintaining consistent standards across all content.

Automation handles repetitive tasks effectively but struggles with context-dependent situations that require human judgment.

Combining both approaches gives you the best results. Your AI filters catch clear violations instantly while flagging borderline cases for human review, creating an efficient system that balances speed with accuracy.
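A common way to implement this split is to route each video by the classifier's confidence score. In this sketch, the `route` function and both thresholds (0.95 and 0.50) are assumptions for illustration; the right values depend on your model and on how many mistakes of each kind you can tolerate.

```python
from typing import Literal

Route = Literal["auto_remove", "human_review", "publish"]

# Assumed thresholds for illustration; tune them against your own labelled data.
REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.50


def route(violation_score: float) -> Route:
    """Map a classifier's violation probability (0.0 to 1.0) to a queue."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if violation_score >= REMOVE_THRESHOLD:
        return "auto_remove"   # clear violation: act instantly
    if violation_score >= REVIEW_THRESHOLD:
        return "human_review"  # borderline: a person checks the context
    return "publish"           # likely fine: goes live, still reportable


for score in (0.99, 0.7, 0.1):
    print(score, route(score))  # 0.99 auto_remove / 0.7 human_review / 0.1 publish
```

Raising `REMOVE_THRESHOLD` means fewer legitimate videos are removed automatically, at the cost of a larger human review queue; lowering `REVIEW_THRESHOLD` catches more borderline content but adds work for your team.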

Types of content to look out for

Your moderation team needs clear guidelines on which content categories require action. Understanding video moderation involves recognizing the specific threats that appear on platforms daily. These categories range from obviously illegal material to subtle policy violations that harm your community environment. Your moderators should know exactly what they're screening for to maintain consistent enforcement across all uploads.

Violence and graphic content

Videos depicting real violence, self-harm, or graphic injuries require immediate removal from most platforms. Your system should flag content showing physical attacks, animal cruelty, or disturbing medical procedures that violate your standards. Context matters here, since educational content about violence prevention or news footage might receive exceptions. Your policies should define exactly which violent imagery you allow for documentary or awareness purposes versus content that glorifies harm.

Hate speech and harassment

Content targeting individuals or groups based on protected characteristics violates both legal standards and community trust. Your moderators watch for slurs, dehumanizing language, and calls for discrimination or violence against specific populations. Harassment videos that dox individuals, encourage brigading, or share private information without consent also fall into this category.

Your moderation approach must distinguish between legitimate criticism and coordinated attacks designed to silence or intimidate users.

Sexual and inappropriate material

Any sexual or suggestive material involving minors demands a zero-tolerance policy. Your filters should immediately catch sexually suggestive content featuring children, predatory behavior, and pornographic videos or nudity that your rules prohibit. Many platforms also restrict adult sexual content entirely to maintain advertiser relationships and protect younger users who access your site.

Best practices for your strategy

Your moderation strategy determines how effectively you protect users while keeping legitimate content flowing. Building a robust system requires combining technology, training, and continuous improvement to handle the unique challenges your platform faces. Your approach should scale with growth while maintaining consistent enforcement that users trust. What video moderation looks like for your platform depends on balancing safety with user experience at every decision point.

Establish clear content policies

Your written guidelines must define exactly what content you allow and prohibit in specific terms. Avoid vague language like "inappropriate material" and instead describe concrete examples of violations with visual references your team can follow. Your policies should cover edge cases and provide decision trees for borderline situations that moderators encounter regularly. Update these documents quarterly to address new violation types and platform changes. Share your public-facing rules prominently so users understand expectations before they upload content.
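A decision tree can also be written down as code, which forces every branch to be explicit. The example below applies the violence guidance from this guide (real violence is removed unless a news, documentary, or educational exception applies, and glorification never qualifies); `ViolenceContext`, its fields, and the outcome labels are hypothetical. In a real policy, each branch should point to a specific clause your moderators can cite.

```python
from dataclasses import dataclass


@dataclass
class ViolenceContext:
    """Hypothetical facts a moderator records while watching a clip."""
    real_violence: bool
    glorifies_harm: bool
    news_or_documentary: bool
    educational_purpose: bool
    shows_graphic_injury: bool


def violence_decision(ctx: ViolenceContext) -> str:
    """Example decision tree for one borderline category."""
    if not ctx.real_violence:
        return "not_covered"  # this tree only handles real violence
    if ctx.glorifies_harm:
        return "remove"       # glorification never qualifies for an exception
    if ctx.news_or_documentary or ctx.educational_purpose:
        # The exception applies, but graphic injuries are a judgment call.
        return "escalate" if ctx.shows_graphic_injury else "allow"
    return "remove"           # real violence with no documented exception


news_clip = ViolenceContext(real_violence=True, glorifies_harm=False,
                            news_or_documentary=True, educational_purpose=False,
                            shows_graphic_injury=True)
print(violence_decision(news_clip))  # escalate
```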

Build a multi-layered review system

Your moderation workflow should combine automated filters, human review, and escalation protocols that catch problems at different stages. Set up AI tools to screen all uploads immediately for known violations, then route flagged content to trained moderators for final decisions. Create priority tiers so your team handles dangerous content like violence or child exploitation immediately while less urgent violations wait in queue.

Your system works best when automation handles obvious cases while preserving human judgment for complex situations that require context.
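Priority tiers map naturally onto a priority queue. This sketch uses Python's standard `heapq` module with a hypothetical `PRIORITY` table in which child exploitation is reviewed before violence, and violence before hate speech and spam; a running counter keeps first-in, first-out order within each tier.

```python
import heapq
import itertools

# Hypothetical tiers: a lower number is reviewed first.
PRIORITY = {
    "child_exploitation": 0,
    "violence": 1,
    "hate_speech": 2,
    "spam": 3,
}


class ReviewQueue:
    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._counter = itertools.count()  # tie-breaker: FIFO within a tier

    def push(self, video_id: str, category: str) -> None:
        tier = PRIORITY.get(category, len(PRIORITY))  # unknown categories go last
        heapq.heappush(self._heap, (tier, next(self._counter), video_id))

    def pop(self) -> str:
        """Return the most urgent video that has waited the longest."""
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


review_queue = ReviewQueue()
review_queue.push("vid-1", "spam")
review_queue.push("vid-2", "violence")
review_queue.push("vid-3", "child_exploitation")
review_queue.push("vid-4", "violence")
order = [review_queue.pop() for _ in range(len(review_queue))]
print(order)  # ['vid-3', 'vid-2', 'vid-4', 'vid-1']
```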

Monitor and improve continuously

Track your false positive rates, average review times, and appeal outcomes to identify system weaknesses. Your data reveals which content types slip through filters and where moderators need additional training or clearer guidelines. Regular audits of past decisions help you spot consistency problems before they damage user trust.
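These numbers can be computed directly from your decision logs. The sketch assumes a hypothetical `ReviewRecord` format in which appeals only apply to removals. The share of removals overturned on appeal is only a proxy for false positives, because not every wrongly removed uploader appeals.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ReviewRecord:
    """Hypothetical log entry; field names are illustrative."""
    removed: bool           # did the moderation decision remove the video?
    review_seconds: float   # time from flag to decision
    appealed: bool
    overturned: bool        # appeal succeeded, so the removal was wrong


def moderation_metrics(records: list[ReviewRecord]) -> dict[str, float]:
    removals = [r for r in records if r.removed]
    appeals = [r for r in records if r.appealed]
    return {
        # Proxy for false positives: undercounts, since not everyone appeals.
        "overturned_removal_share": (
            sum(r.overturned for r in removals) / len(removals) if removals else 0.0
        ),
        "avg_review_seconds": mean(r.review_seconds for r in records) if records else 0.0,
        "appeal_overturn_rate": (
            sum(r.overturned for r in appeals) / len(appeals) if appeals else 0.0
        ),
    }


log = [
    ReviewRecord(removed=True, review_seconds=120.0, appealed=True, overturned=True),
    ReviewRecord(removed=True, review_seconds=60.0, appealed=True, overturned=False),
    ReviewRecord(removed=True, review_seconds=30.0, appealed=False, overturned=False),
    ReviewRecord(removed=False, review_seconds=90.0, appealed=False, overturned=False),
]
metrics = moderation_metrics(log)
print(metrics)
```

Tracking these values per content category, rather than only overall, shows which filters or guidelines need attention first.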

Final thoughts on video safety

Understanding what video moderation is gives you the foundation to protect your platform and users effectively. Good moderation combines technology, trained reviewers, and clear policies that evolve with emerging threats. You can't afford to wait until problems appear before implementing these systems, since reputation damage happens faster than you can respond. Your commitment to safety directly impacts user trust, legal compliance, and long-term growth across every aspect of your platform operations.

Platforms handling user-generated video content face unique challenges that require specialized approaches tailored to specific use cases. If you're collecting video submissions from event attendees or managing any type of community content, you need moderation processes that scale with your growing needs. SureShot helps event organizers manage video content safely while leveraging authentic attendee perspectives for promotion. Book a demo to see how our platform handles content collection with built-in safety features that protect your brand and community.